7 Ways to Avoid Loading Speed Issues in A/B Testing

You’re doing A/B split testing to improve conversion rates, but A/B testing tools may actually slow down your website, increasing your bounce rate and affecting your search engine ranking.

A/B Testing tools – including Google Optimize – can cause a white page effect before loading the variation, known as A/B Testing Page Flickering.

Page Loading Speed & A/B Testing

Site loading speed affects search engine rankings and conversion rates. A one-second delay in page loading typically results in 7% fewer conversions and 11% fewer page views.

Visitors can identify images in as little as 13 milliseconds, so the page flicker associated with slow loading times can seriously affect user experience. It can also lead to lower search engine rankings.

Our solution has been to create a Lightning Mode that is always ON and makes our Javascript “Pixel” lighter and much faster than alternative software.

7 Ways to Increase Page Speed in A/B Testing

Our pixel is now installed on thousands of sites. With over half a million requests per day, we have been presented with two challenges:

- How can we ensure our pixel is served as fast as possible?

- How can we ensure our pixel runs an experiment as fast as possible?

This article gives an overview of the technologies and concepts we used to answer these questions. We conclude with the A/B testing benchmark we established, which shows that our pixel now provides A/B testing up to 12.8 times faster than a well-known alternative platform.

Our engineers and developers found 7 key ways to optimize our Pixel, ensuring it does not affect page loading speed.

The pixel uses core JavaScript instead of third-party libraries like jQuery, which keeps the file small and ensures it works well in all browsers and devices that support JavaScript.

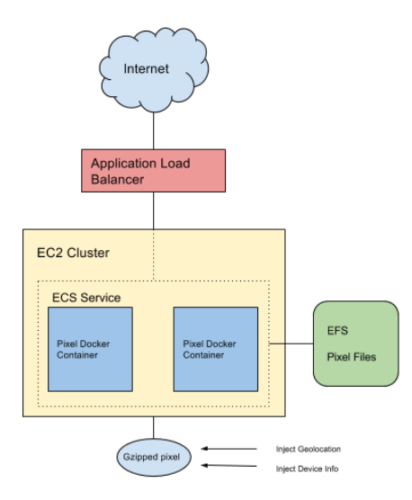

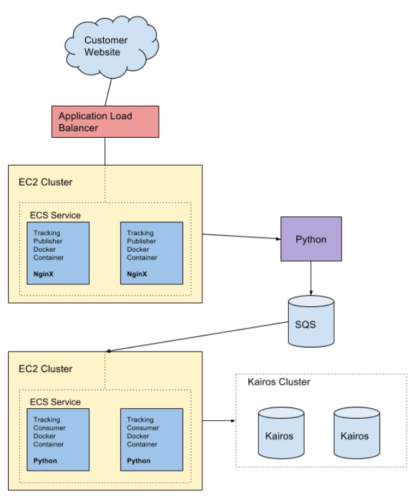

As our customer base grew, we knew it was time to re-architect our server infrastructure. We migrated from a Google Cloud environment to Amazon Web Services, introducing Docker containers and autoscaling at the same time.

The pixel is served by a set of containers in an ECS cluster behind an Application Load Balancer (ALB). Each container runs NginX, which serves the JavaScript files from EFS storage and Gzips them to reduce their size further.
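
To get a feel for what Gzip saves on a script file, here is a minimal Python sketch using the standard `gzip` module. It does not reproduce our NginX configuration; the sample script and the compression level are assumptions chosen only for illustration.

```python
import gzip

# Hypothetical pixel source. Repetitive code compresses well, much like real JavaScript.
pixel_js = (
    "function applyChange(selector, html) {"
    " document.querySelector(selector).innerHTML = html; }\n"
) * 50

raw = pixel_js.encode("utf-8")
compressed = gzip.compress(raw, compresslevel=6)  # level 6 is an assumed setting

print(f"raw: {len(raw)} bytes, gzipped: {len(compressed)} bytes")

# The browser reverses this transparently when the response is gzip-encoded.
restored = gzip.decompress(compressed)
```

The exact ratio depends on the file, but text assets such as JavaScript usually shrink substantially.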

We will write about our move to AWS in another article, including how it now allows us to handle any amount of traffic by scaling our services up automatically when required. Here is a diagram showing an overview of the AWS setup for serving the pixel.

Figure 8. Server architecture of Pixel setup

A webpage contains many assets (images, Javascript, CSS) which all must be loaded before the page is ready to be presented to the user. The Convertize pixel loads as fast as possible by ensuring:

- All data, code and CSS required are embedded into a single file. Browsers can only handle so many concurrent connections per domain, so the fewer HTTP connections the better.

- Any user detection information, such as geolocation and device detection, is injected directly into the pixel by NginX so we don’t have to make further third-party API calls.
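
The single-file idea can be sketched in Python as follows. This is an illustration under stated assumptions, not our build code: the function name, the JavaScript variable names and the sample values are all hypothetical, and the injection here happens in Python rather than in NginX.

```python
import json


def build_single_file_pixel(core_js: str, css: str, experiment: dict, visitor: dict) -> str:
    """Return one JavaScript payload that embeds CSS, experiment data and visitor context."""
    # json.dumps produces valid JavaScript literals for plain data.
    parts = [
        f"var CSS = {json.dumps(css)};",
        f"var EXPERIMENT = {json.dumps(experiment)};",
        f"var VISITOR = {json.dumps(visitor)};",
        core_js,
    ]
    return "\n".join(parts)


payload = build_single_file_pixel(
    core_js="/* core pixel code */",
    css=".hidden{opacity:0}",
    experiment={"id": 42, "variation": "B"},
    # Visitor context is injected server-side, so the browser makes no extra API call.
    visitor={"country": "FR", "device": "mobile"},
)
print(payload)
```

Everything the browser needs arrives in one response, which is the point of the approach.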

A user can enable or disable certain features for their experiment. For example, they may choose to modify the page only, or also add a SmartPlugin™.

To keep the pixel download as small as possible, we take into account what the experiment actually needs to do and only download the code necessary for it.

For example, as soon as the user turns off all experiments, the pixel shrinks to 0 bytes. If they don’t use any SmartPlugins, the code for those plugins will not be part of the downloaded file.
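
A minimal sketch of this selection logic, assuming a hypothetical split of the pixel into three modules and a hypothetical experiment format (neither is taken from our actual code base):

```python
# Hypothetical module sources; in practice these would be real JavaScript files.
MODULES = {
    "core": "/* core: bucketing + tracking */",
    "editor": "/* SmartEditor modifications */",
    "plugins": "/* SmartPlugin rendering */",
}


def build_pixel(experiments: list) -> str:
    """Assemble only the code that the active experiments actually need."""
    active = [e for e in experiments if e.get("active")]
    if not active:
        return ""  # no running experiments: serve a 0-byte file

    needed = ["core"]
    if any(e.get("modifications") for e in active):
        needed.append("editor")
    if any(e.get("plugins") for e in active):
        needed.append("plugins")
    return "\n".join(MODULES[name] for name in needed)


# An experiment that only edits the page does not pull in the plugin code.
print(build_pixel([{"active": True, "modifications": [{"selector": "h1"}]}]))
```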

In order to avoid the FOOC issue (Flash of Original Content, also called Flickering effect), the pixel will briefly hide the content of the page until all SmartEditor™ modifications have been made.

Is this always necessary? What if the only change being made is to add a SmartPlugin™ like Scarcity to the page? In this instance, there is no need to hide the original content because it is not being modified. We are only displaying a notification on top of the existing page.
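
The decision can be reduced to a small predicate. The sketch below is written in Python purely for illustration (the real pixel runs as JavaScript in the browser), and the `ChangeType` names are hypothetical:

```python
from enum import Enum


class ChangeType(Enum):
    EDITOR_MODIFICATION = "editor"  # alters content that is already on the page
    PLUGIN_OVERLAY = "plugin"       # draws on top of the page, e.g. a notification


def should_hide_original(changes) -> bool:
    """Hide the page only if content the visitor would otherwise see is about to change."""
    return any(change is ChangeType.EDITOR_MODIFICATION for change in changes)


# A plugin-only experiment leaves the original content visible from the start.
print(should_hide_original([ChangeType.PLUGIN_OVERLAY]))
```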

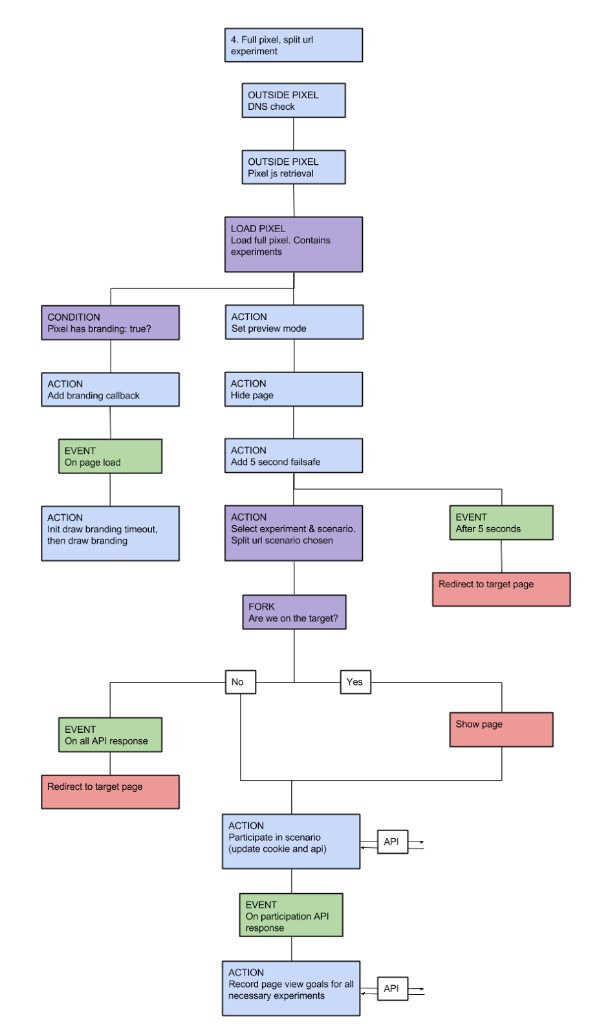

The pixel detects the type of changes an experiment requires and does only the work needed, so the user sees the page as soon as possible. The following flow shows how the pixel applies a Split URL variation.

Figure 9. Pixel flow for Split URL variation

When an experiment is loaded onto a page, one of the tasks of the pixel is to record goals. This is accomplished by sending an HTTP request for a blank image with parameters to the tracking server and then listening for a successful status code.

It is important that these requests are non-blocking and asynchronous where possible, to ensure the page load speed isn’t affected and that the tracking happens behind the scenes without the user being aware. The following are examples of optimizations made by the pixel in relation to goal tracking:

- Page View goals are handled only when the experiment has been loaded into the page and the page has been displayed to the user. This is because the user experience has a much higher priority.

- When sending these tracking requests, we don’t need to wait for a response unless the variation being applied is a Split URL variation, in which case we must make sure all tracking was recorded successfully before redirecting the user.
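
The two rules above can be sketched as follows. The endpoint URL, the parameter names (`e`, `v`, `g`) and the variation type string are assumptions made for the example, and the code is Python for illustration even though the pixel itself is JavaScript:

```python
from urllib.parse import urlencode

TRACKING_ENDPOINT = "https://tracking.example.com/t.gif"  # hypothetical URL


def build_tracking_url(experiment_id: int, variation_id: int, goal: str) -> str:
    """Encode a goal as query parameters on a blank-image request."""
    query = urlencode({"e": experiment_id, "v": variation_id, "g": goal})
    return f"{TRACKING_ENDPOINT}?{query}"


def must_wait_for_response(variation_type: str) -> bool:
    """Only a Split URL variation has to confirm tracking before it redirects."""
    return variation_type == "split_url"


url = build_tracking_url(42, 2, "page_view")
print(url)
print(must_wait_for_response("split_url"))
```

For every other variation type the request can be fired and forgotten, which keeps tracking off the critical path of the page load.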

Backend processing of conversion data is an area we have improved greatly, so that it stays as efficient as possible given the high number of requests sent per day.

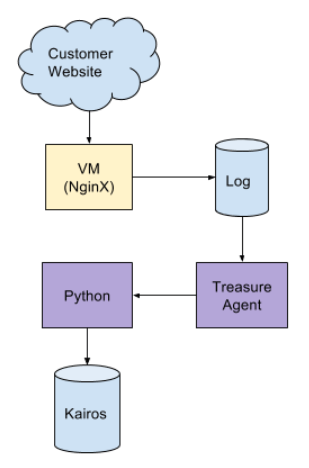

With our previous setup, we had an NginX server listening for conversion requests and logging the data to a file, which was read by a Treasure Agent daemon. The daemon would parse the log data for conversion and experiment participation entries and then spawn a script to insert the conversion into KairosDB (a time series database built on Apache Cassandra).

NginX was configured with the ngx_http_userid module to create 3rd party cookies automatically in order to identify clients by assigning them a unique identifier. The conversion script would query KairosDB with this unique identifier and determine whether the conversion was new or not (this is especially important to identify new vs returning visitors).

Following is a diagram showing our previous setup.

Figure 10. Old architecture for processing conversions

What we learnt as traffic grew was that this setup could not scale. Here were some of the problems we faced:

- Storing and querying KairosDB for unique identifiers was not a scalable solution. The more conversions we had, the slower the system became.

- Using Treasure Agent in this way was limited by the speed at which it could parse log files. We were unable to scale this out as the log entries had to be read in sequential order to handle cross-domain tracking.

After our engineers thought about what we needed to accomplish, we re-architected a solution that would automatically scale in our AWS infrastructure and apply conversion processing 200 times faster.

Our new solution has said goodbye to Treasure Agent and the NginX module for visitor identification. When the pixel sends a tracking request to the server, it is received by NginX running inside a scalable Docker service, which proxies it through to a Python WSGI application.

The application will now store all experiment participation and previous conversion data into a 3rd party cookie, meaning that identification of new vs returning users and conversions is done instantly without the need to query a database.
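
A minimal sketch of this idea as a WSGI application, using only the Python standard library. The cookie name, the `g` query parameter, the JSON cookie format and the `X-New-Conversion` header are all assumptions made for the example and are not taken from our production code:

```python
import json
from http.cookies import SimpleCookie
from urllib.parse import parse_qs, quote, unquote

COOKIE_NAME = "ab_state"  # hypothetical cookie name


def tracking_app(environ, start_response):
    """Decide new vs. repeat conversion from the cookie alone, with no database query."""
    cookie = SimpleCookie(environ.get("HTTP_COOKIE", ""))
    state = json.loads(unquote(cookie[COOKIE_NAME].value)) if COOKIE_NAME in cookie else {}
    seen = set(state.get("conversions", []))

    goal = parse_qs(environ.get("QUERY_STRING", "")).get("g", [""])[0]
    is_new = bool(goal) and goal not in seen
    if is_new:
        seen.add(goal)

    # Write the updated state back into the cookie for the next request.
    value = quote(json.dumps({"conversions": sorted(seen)}))
    headers = [
        ("Content-Type", "text/plain"),
        ("Set-Cookie", f"{COOKIE_NAME}={value}; Path=/; SameSite=None; Secure"),
        ("X-New-Conversion", "1" if is_new else "0"),  # illustrative only
    ]
    start_response("200 OK", headers)
    return [b""]


def simulate(cookie_header: str, query: str) -> dict:
    """Call the app once without a server and return the response headers."""
    captured = {}
    tracking_app(
        {"HTTP_COOKIE": cookie_header, "QUERY_STRING": query},
        lambda status, headers: captured.update(headers),
    )
    return captured


first = simulate("", "g=signup")
second = simulate(first["Set-Cookie"].split(";")[0], "g=signup")
print(first["X-New-Conversion"], second["X-New-Conversion"])  # prints "1 0"
```

The second request carries the cookie set by the first, so the repeat conversion is recognised without touching any storage.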

If the conversion is one we wish to track, it is sent to an Amazon SQS queue, where consumer Python scripts pop items off the queue and process them, inserting data into KairosDB. As there is no longer any uniqueness query to perform, we simply insert data, and write operations in KairosDB are lightning fast. We improved this speed further by creating a KairosDB and Cassandra cluster behind load balancers.
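
The producer and consumer pattern can be sketched locally with the standard library. In this example `queue.Queue` stands in for Amazon SQS and a plain list stands in for KairosDB; both substitutions, along with the message fields and the worker count, are assumptions made so the sketch runs without any AWS access:

```python
import queue
import threading

conversion_queue = queue.Queue()  # local stand-in for the Amazon SQS queue
stored_points = []                # local stand-in for KairosDB writes
store_lock = threading.Lock()


def insert_datapoint(message: dict) -> None:
    """Write-only step: no uniqueness lookup is needed before inserting."""
    with store_lock:
        stored_points.append(message)


def consumer() -> None:
    """Pop messages off the queue until a None sentinel arrives."""
    while True:
        message = conversion_queue.get()
        if message is None:
            conversion_queue.task_done()
            break
        insert_datapoint(message)
        conversion_queue.task_done()


workers = [threading.Thread(target=consumer) for _ in range(3)]
for worker in workers:
    worker.start()

for visitor in range(10):
    conversion_queue.put({"experiment": 42, "goal": "signup", "visitor": visitor})
for _ in workers:
    conversion_queue.put(None)  # one shutdown sentinel per worker

conversion_queue.join()
for worker in workers:
    worker.join()
print(len(stored_points))  # prints 10
```

Because each consumer only writes, adding more workers scales throughput without any coordination between them.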

Here is our new setup, showing the path from the browser making the conversion request to the conversion finally being tracked.

Figure 11. New architecture for processing conversions

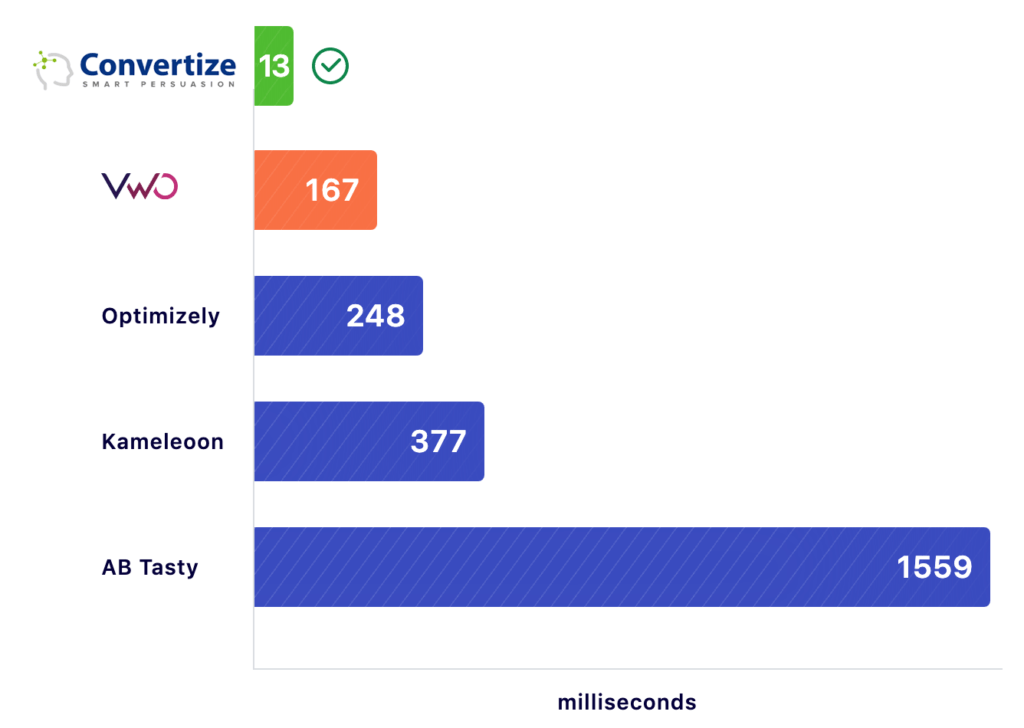

UPDATE August 2021: VWO have recently published a benchmark of loading speed on their home page. This is how we compare: Convertize is still 12.8x faster than VWO and 120x faster than AB Tasty.

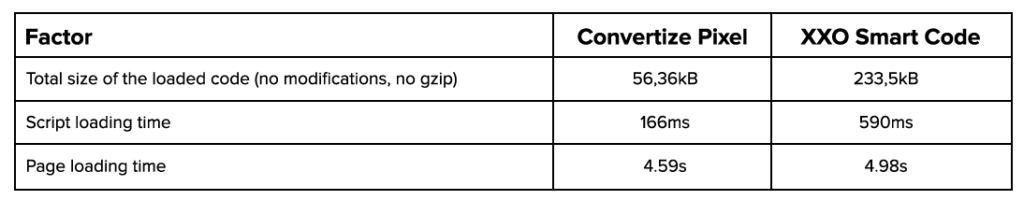

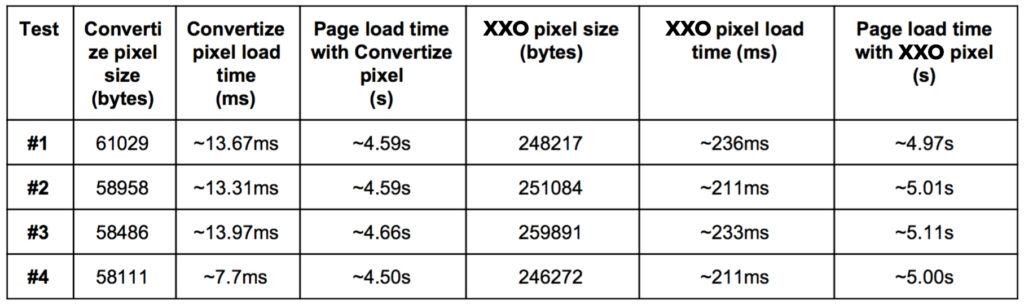

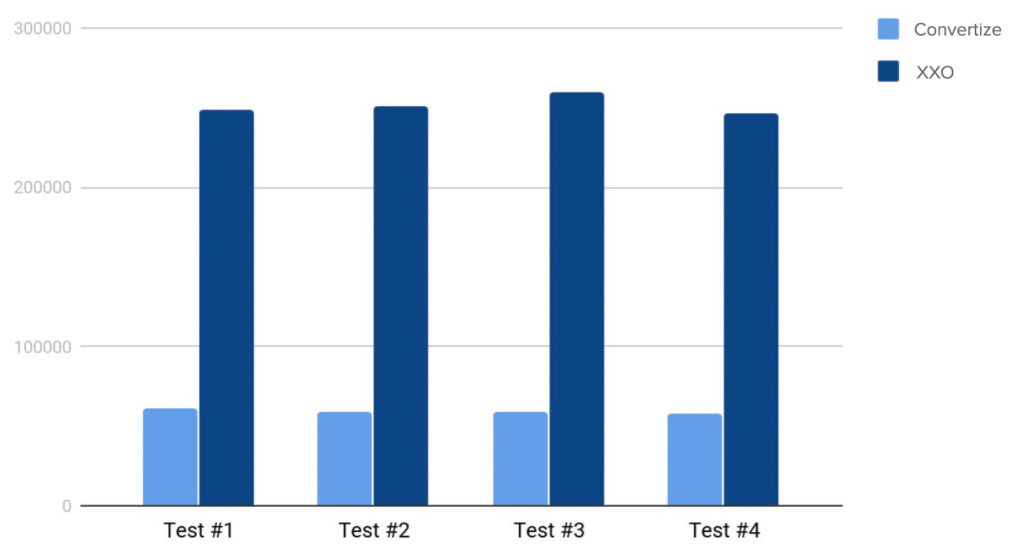

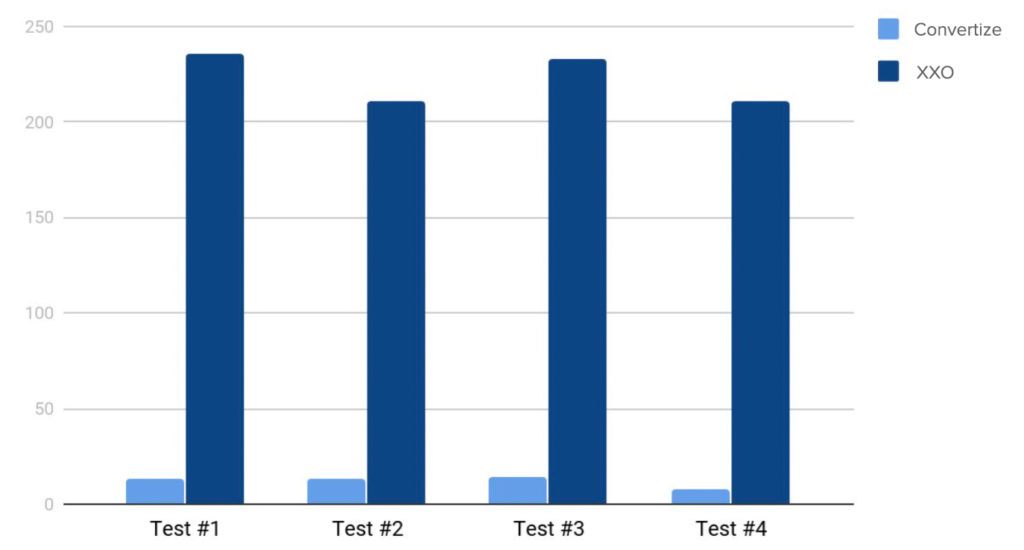

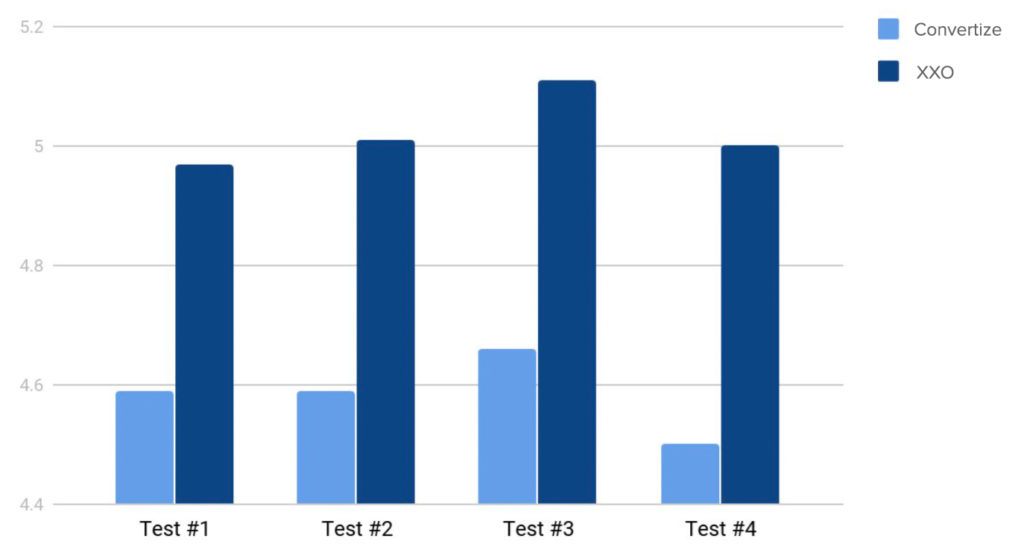

In 2017, we conducted a loading speed benchmark against our competitors (for propriety’s sake we have omitted the competitor’s name and use XXO throughout instead).

This is how we proceeded:

We first benchmark pixel size with just the core functionality included. The size affects how much data must be downloaded before your A/B test code can begin to run. Following on from this, we run several different A/B tests, made up of the typical modifications a user would make on their site, and compare 3 results:

- Pixel Size – How much data is being downloaded

- Pixel Load Time – How much time it takes to download and run the pixel code

- Page Load Time – How long before your A/B test has been applied and the variation is displayed

Basic A/B testing benchmark information

- Internet connection speed: 20Mbps

- Website size: ~1223KB

- DOM tree size: 732 elements

- Page loading time was measured using the GTmetrix tool

- Original page load time (without any pixels): 4.40s

- GZip compression disabled

Loading all pixel code with no modifications

Our Pixel has a few key advantages compared to the competition:

- It doesn’t load any additional resources (JS/CSS), so its size is very small (56kB for all code). It requires only one HTTP request to our servers. XXO sends at least 4 different requests, which has a bad impact on the loading time.

- It barely slows down the customer’s website. Loading time is very close to the original loading time.

- It’s easy to install – one simple tag vs. a piece of JS code (XXO)

Comparison benchmark

Loading Speed Test #1

Benchmark steps:

- Modify text of a 676 bytes long paragraph

- Insert new paragraph with 128 bytes of text

- Change content of the inserted paragraph (extend content with additional 600 bytes of data)

- Advanced HTML action on the 4645 bytes long container

- Add attribute to the element A (data-attribute="test")

- Change style for element B (add color and background color)

- Remove DOM element (40 bytes long)

- Rearrange elements

- Insert new image

Loading Speed Test #2

Benchmark steps:

- Modify text of element A – remove 100 bytes

- Modify text of element A – add 50 bytes

- Modify text of element A – replace content with new text (128 bytes)

- Advanced HTML on element A – add H1 (30 bytes)

- Remove element A

- Undo changes

- Modify text of element A – add 1024 bytes

Loading Speed Test #3

Benchmark steps:

- Rearrange element A after element B

- Rearrange element A after C

- Rearrange element A after D

- Rearrange element A before element B (original position)

- Advanced HTML on the parent of element A

- Rearrange element A after element B

- Add attribute to element A

- Change style of element C

  - Change color

  - Change background-color

  - Add border

- Use advanced CSS editor for element C

- Remove all properties (should be original state)

Loading Speed Test #4

Benchmark steps:

- Modify text of element A

- Modify text of element A

- Modify text of element A

- Revert original text of element A (Pixel should do nothing)

Loading Time Results

Pixel size

Pixel load time

Page load time

These tests show that our pixel is now up to 17.2x faster than XXO, which is a massive difference!

About the future

In this article, we have outlined the 7 ways we optimized our A/B testing pixel.

This development signifies enormous improvement and innovation to our optimization platform, allowing our solution to work smarter and faster than any other optimization product on the market. And yet, we are aware that this is still just a stepping stone along the way to perfect optimization.

Also read How we made our Visual Editor Faster and Smarter.

Author note: I would like to thank David and John for their hard work and endless knowledge. Without their extensive contribution none of this would have been possible.