What is A/B Testing? Definition:

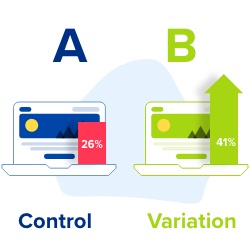

A/B Testing lets you compare the conversion rates of two versions of a page by showing half your visitors the current version of your site (the ‘Control’) and the other half the modified page (the ‘Treatment’). By comparing which version got more signups, sales or any other metric, you can determine which version is more effective.

By running A/B tests you can verify a hypothesis with statistically significant data from an experiment done with real visitors, rather than making uninformed decisions based on intuition.

Successful brands use A/B testing as a constant process to guide their web development. Since the introduction of tools like Optimizely and VWO in 2010, A/B testing has also become an important part of digital marketing.

How Does A/B Testing Work?

In order to perform effective tests, you need a “hypothesis”, a way to edit your site, and a tool to record the results. Your hypothesis is simply your idea about how to improve your webpage. This might be changing the location of a call-to-action, the layout of a page, or even the colour of a button.

A/B testing software monitors and records the effect of the changes on your visitors’ behaviour. It divides traffic between the ‘treatment’ and the ‘control’ and measures the different responses. More sophisticated tools even send more visitors to the best-performing page, so you don’t lose out on customers whilst your test is running.
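As a rough illustration of how traffic might be divided, the sketch below assigns each visitor to a group by hashing an identifier. It is a minimal example under stated assumptions, not how any particular tool works: the function name `assign_variant`, the experiment label `homepage-cta` and the visitor ID format are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-cta") -> str:
    """Return 'control' or 'treatment', splitting traffic roughly 50/50.

    Both the experiment name and the visitor ID format are hypothetical.
    """
    # Hash the experiment name together with the visitor ID so that the
    # same visitor always lands in the same group for this experiment.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a stable bucket between 0 and 99
    return "control" if bucket < 50 else "treatment"


# Simulate 10,000 visitors and count how many land in each group.
counts = {"control": 0, "treatment": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

Hashing, rather than picking a group at random on each visit, means a returning visitor keeps seeing the same version, which helps to keep the two groups cleanly separated.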

Once your site has received enough visits, your software will declare a winner. However, there is another important step to take before the changes can be made permanent. Analysing the statistical significance of your data is a crucial part of the A/B testing process.
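One common way to check significance for conversion rates is a two-proportion z-test. The sketch below is an illustration only: the visitor and conversion counts are made up, and real tools may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare two conversion rates; return (z statistic, two-sided p-value)."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("Each version needs at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 200 of 10,000 control visitors converted (2.0%),
# against 250 of 10,000 treatment visitors (2.5%).
z, p_value = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A p-value below 0.05 corresponds to the 95% Confidence Level that many testing tools use as their default threshold.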


As with any other kind of test, human error frequently interferes with otherwise convincing results. So, if you are considering performing A/B tests on a website, make sure you avoid the two most common mistakes:

- Confirmation bias: the classic problem of using a test to confirm your own opinions.

- Trying to draw conclusions without having enough traffic, time or uplift (this is the most common A/B testing mistake).

Whilst most A/B testing tools will do all the calculations for you, it is still worth taking some time to get familiar with A/B testing statistics.
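To get a feel for how traffic and uplift interact, the sketch below estimates the number of visitors needed per version using the standard normal-approximation formula for comparing two proportions. The baseline rate, the uplift and the defaults (5% significance, 80% power) are assumptions chosen for illustration; your own tool may calculate this differently.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_uplift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per version to detect a given uplift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical page: 2% baseline conversion rate, hoping for a 20% uplift.
needed = sample_size_per_variant(0.02, 0.20)
print(f"Visitors needed per version: {needed}")
```

With these assumed figures the estimate is on the order of 20,000 visitors per version. A larger expected uplift needs far fewer visitors, which is why small changes are so hard to test on low-traffic sites.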

A/B Testing vs Multivariate – What is the difference?

A/B testing involves a single variable (e.g. a call-to-action button) with two different versions. When a test involves multiple changes, it can be either an “A/B/n test” or a “Multivariate Test”. Unlike an A/B/n test, Multivariate (MVT) testing shows you how different variables work together and which ones have the biggest influence on your conversions.

What Is A/B/n Testing?

Testing multiple versions of a particular element is known as A/B/n Testing. Suppose you want to try three different colours of button: the page versions would be A, B and C. Because you can add any number of different versions, this kind of test is written as A/B/n, where “n” stands for however many versions you include.

What Is Multivariate Testing?

Multivariate testing works the same way, but compares more than one variable both separately and in combination. That gives you information on how each individual version works and how different variations work together.

For example, testing two versions each of two separate elements (a call-to-action button “X” and a header image “Y”) would mean comparing the following four combinations:

- A – Y1 and X1

- B – Y2 and X1

- C – Y1 and X2

- D – Y2 and X2

Multivariate testing requires an exceptionally large sample size, so it is only really possible for the largest websites.
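The reason is that the number of combinations is the product of the number of versions of each element, and every combination needs its own share of traffic. The sketch below enumerates the combinations for the example above; the element names and the extra `headline` element are hypothetical.

```python
from itertools import product


def combinations_for(elements: dict) -> list:
    """List every combination of versions across the tested elements."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


# The example above: two header images (Y) and two call-to-action buttons (X).
elements = {
    "header_image": ["Y1", "Y2"],
    "cta_button": ["X1", "X2"],
}
combos = combinations_for(elements)
for combo in combos:
    print(combo)
print(f"{len(combos)} combinations")

# Adding a third, hypothetical element with three versions multiplies the total.
elements["headline"] = ["H1", "H2", "H3"]
print(f"{len(combinations_for(elements))} combinations with a third element")
```

Two elements with two versions each give four combinations; adding a third element with three versions gives twelve, each of which needs enough visitors to produce a reliable result.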

What is Split Testing?

Split testing is the same as A/B testing, except the two pages, A and B, are assigned their own URLs. This makes the loading speed of the pages faster, and allows for more extensive changes. However, it can also be a more complicated process and there is a larger possibility of contaminated data.

A/B Testing Examples

A/B testing is a form of “Statistical Hypothesis Testing” – a technique that emerged in the early 20th Century. Scientists such as Ronald Fisher, Karl Pearson and Jerzy Neyman used the technique in their experiments, establishing concepts like the Null Hypothesis.

In the world of marketing, copywriters such as Claude Hopkins applied these new concepts to advertising. Hopkins used the return rate of promotional coupons to measure the impact of different campaigns. He described his technique in a book called Scientific Advertising (1923).

With the development of internet advertising and eCommerce, it became possible to automate testing on marketing and UX designs. A/B testing has been central to the success of countless online businesses and has played a part in major historical events.

Examples of Successful A/B Tests

Since the turn of the century, A/B testing has become a key resource for SaaS, eCommerce and business websites. Because it is easy to sample and track a website’s visitors, most users never realise that they are part of a test.

- 2000: engineers working for Google ran a test to find the optimum number of results to display on a search engine results page. The answer (10 results per page) has remained relatively consistent since then.

- 2008: the presidential campaign for Barack Obama tested an early campaign donor page, identifying a combination of CTA copy and images that produced 40% more clicks. Similar tests were responsible for a reported 4 million additional sign-ups and $75 million in extra campaign donations.

- 2009: a Microsoft employee designed a type of link that would open pages in a new tab. The total number of clicks on the MSN homepage increased by 8.9%, representing a huge increase in user engagement. The test involved over 900,000 UK participants and was repeated (with similar results) in the US in June 2010.

- 2009: Google ran a test of over 40 colour combinations for their search results page, settling on a combination that is believed to have earned them an extra $200 million annually.

- 2012: An A/B test on the format for ad headlines produced 12% additional revenue from Microsoft’s Bing search engine. That translated into an additional $100 million a year.

- 2013: Microsoft tested alternative colours for titles and captions on its search results pages. Because the results were so positive, the company repeated the experiment on a larger sample of 32 million users. When the changes were introduced, they were shown to have produced an extra $10 million of revenue annually.

Today, companies like Google, Microsoft, Amazon and Booking each run tens of thousands of tests annually. Despite the relatively minor impact of most tests, they remain the most reliable way to improve website performance.

#1 – A/B Testing Titles and Subtitles

The advertising great David Ogilvy explained the importance of a strong ad headline in his Confessions of an Advertising Man:

“On the average, five times as many people read the headline as read the body copy. When you have written your headline, you have spent eighty cents out of your dollar… If you haven’t done some selling in your headline, you have wasted 80 per cent of your client’s money.”

Titles and subtitles take an even greater share of attention online, so it makes sense to test them. In fact, if you haven’t tested your headline, you are gambling with over 80 per cent of your PPC budget.

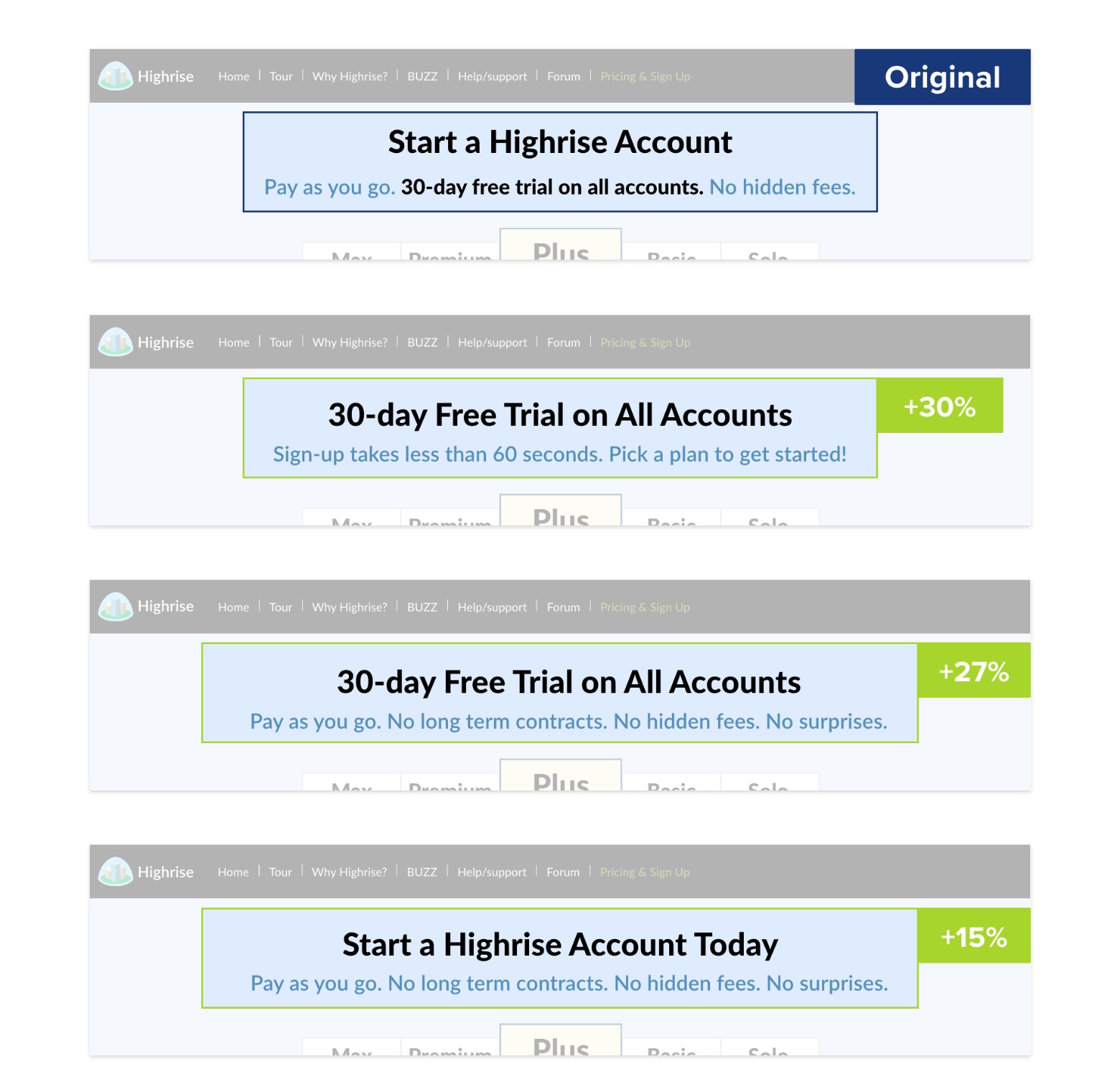

One of the most famous A/B tests from the past 10 years was a simple lead generation sign-up page for the CRM software Highrise. The test compared four different title and subtitle combinations, including the original.

Although Highrise used a small sample for their test, they were confident in the outcome of their experiment. The page title which gave the length of a free trial and explained how easy it was to sign up converted 30% more frequently than the original.

#2 – A/B Testing Website Notifications

Website notifications are a unique form of sales copy. They can display live data or draw attention to hidden details, acting as a powerful “Booster” for your Value Proposition. Like titles and subtitles, notifications typically receive far more attention than standard body copy.

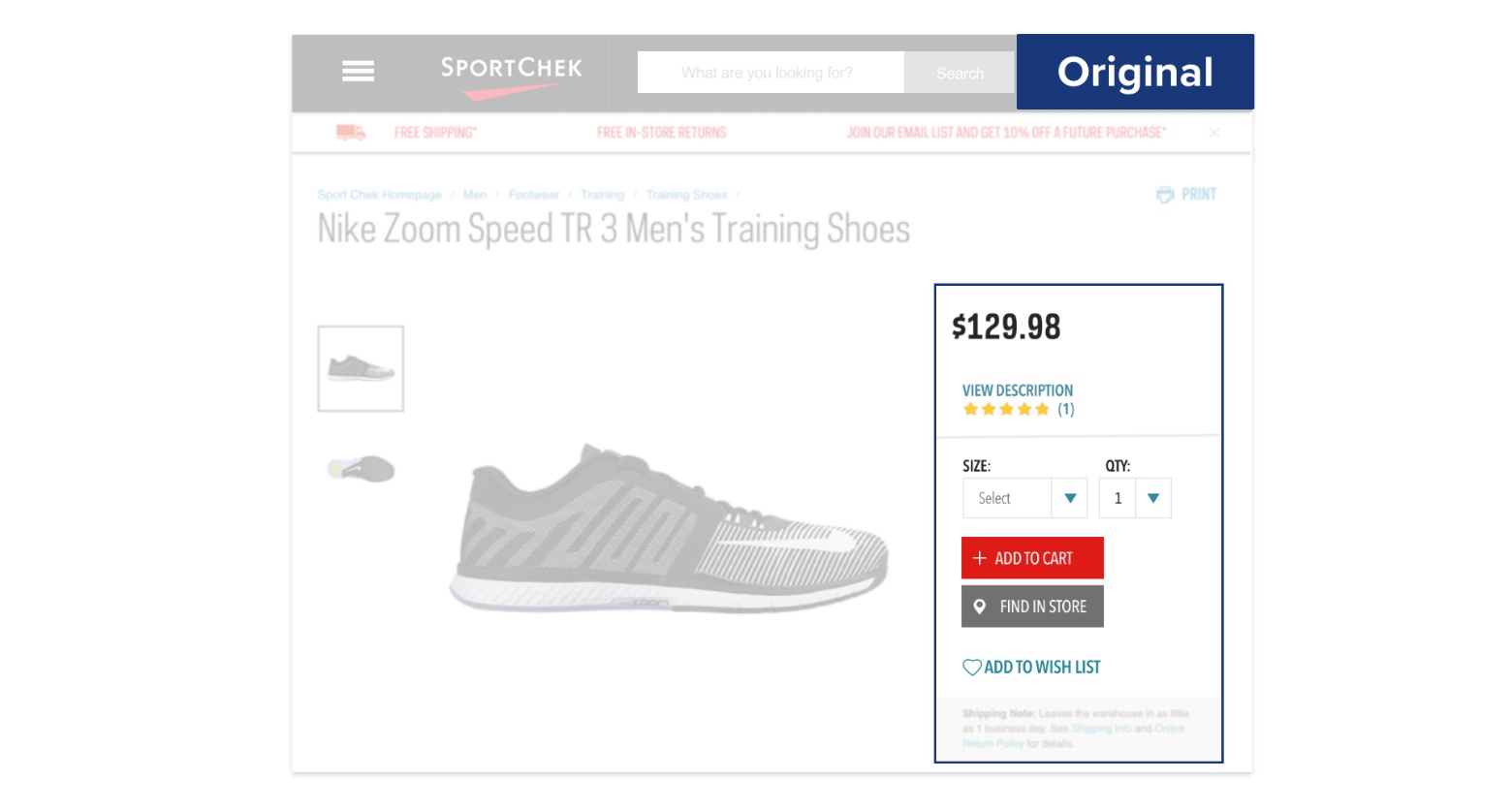

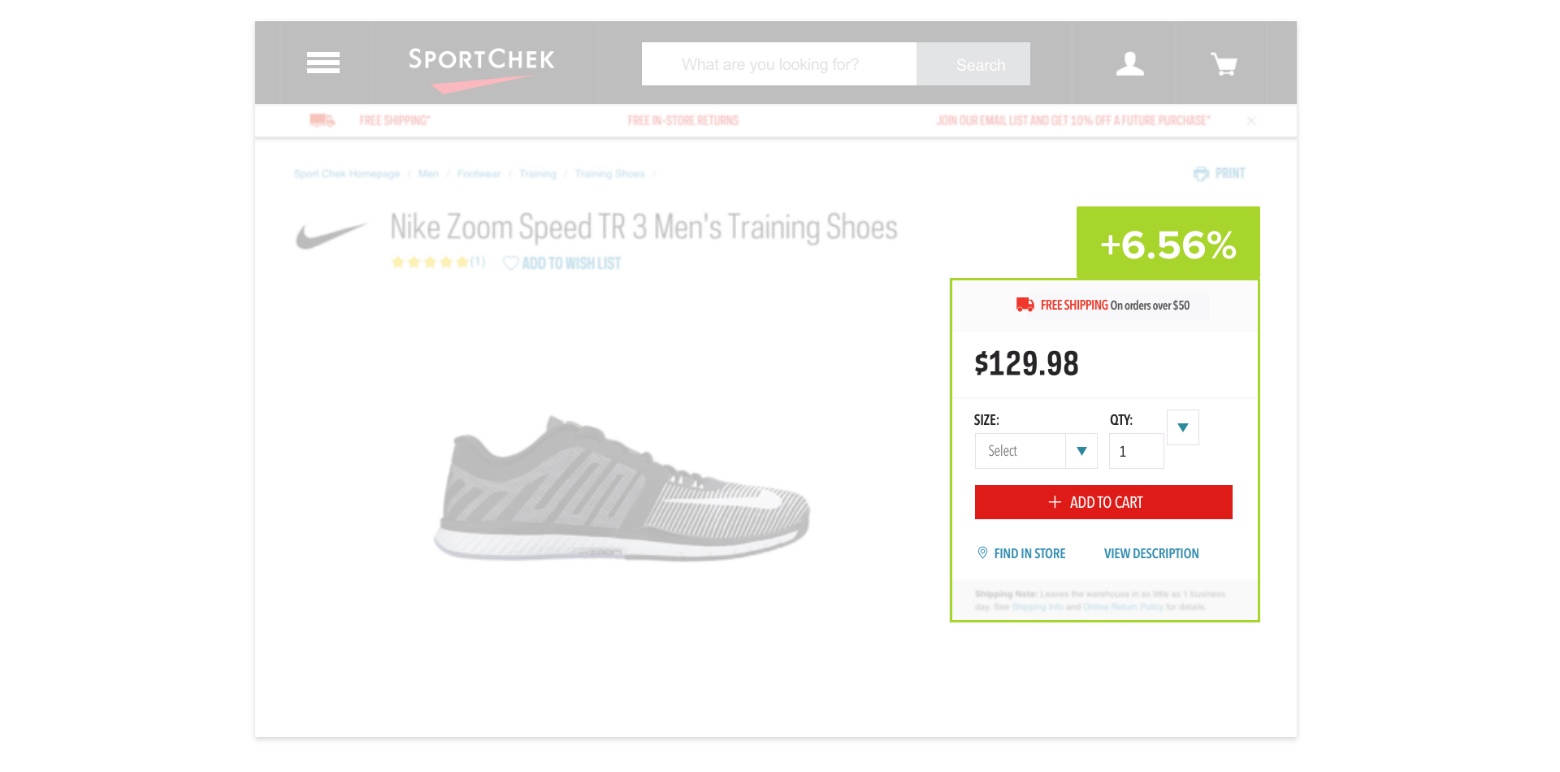

Displaying shipping details early in the checkout process helps to reduce abandoned baskets. In 2019, the eCommerce platform SportCheck experimented with ways to advertise their free shipping policy on product and basket pages. However, the results were disappointing. Adding a notification to their product pages increased sales by a tiny margin, and the test had only reached 55% Confidence.

Exploring the data more carefully, the Director of Experimentation discovered that sales from the test pages had begun to decline on 29 July. On that date, the store’s free shipping policy had been updated so that it only applied to orders over $75. Before the update, the notification had increased sales by 6.56% with 96% Confidence.

#2 – A/B Testing Images

Testing your content allows you to overcome implicit biases. For example, staff within the 2007-8 presidential campaign for Barack Obama found that their instincts about which images would work best were often incorrect. Because images provoke emotional and tacit responses, it’s important to validate your choices with evidence.

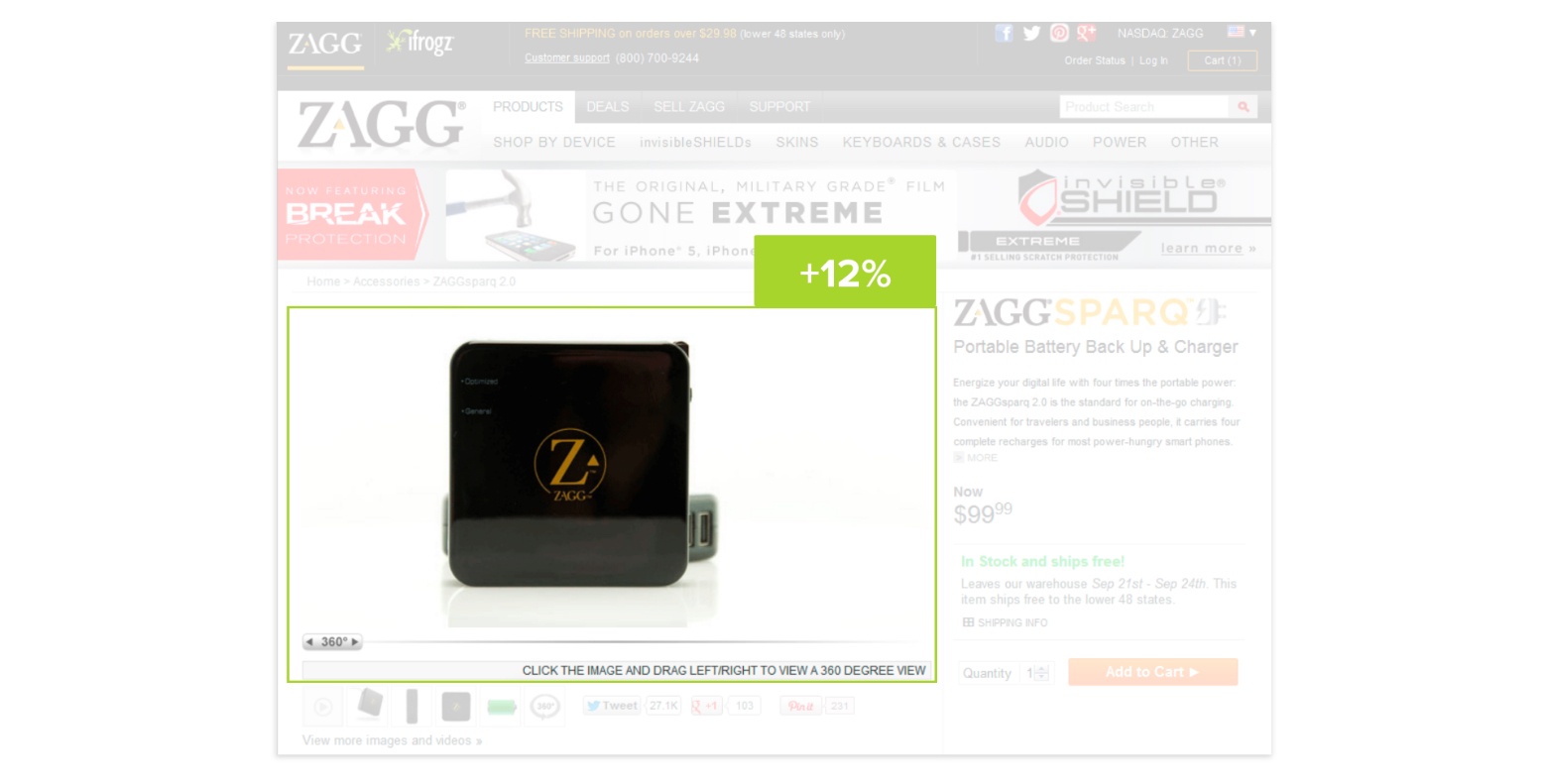

Most agencies would recommend using a video as the first image on a product page. However, in this A/B test, conducted by the mobile accessory retailer Zagg, a 360-degree image outperformed the product video by 11.9%.

The test measured the average revenue per customer, so that low-value sales could not affect the outcome. The results were significant to a Confidence Level of 95.4%.

#3 – A/B Testing Web Forms

The infamous “$300 million button” case study demonstrates the value of testing forms. One of the major obstacles faced by web designers is the “Curse of Knowledge”. It’s difficult to create user-friendly experiences because designers already know how their forms and processes work.

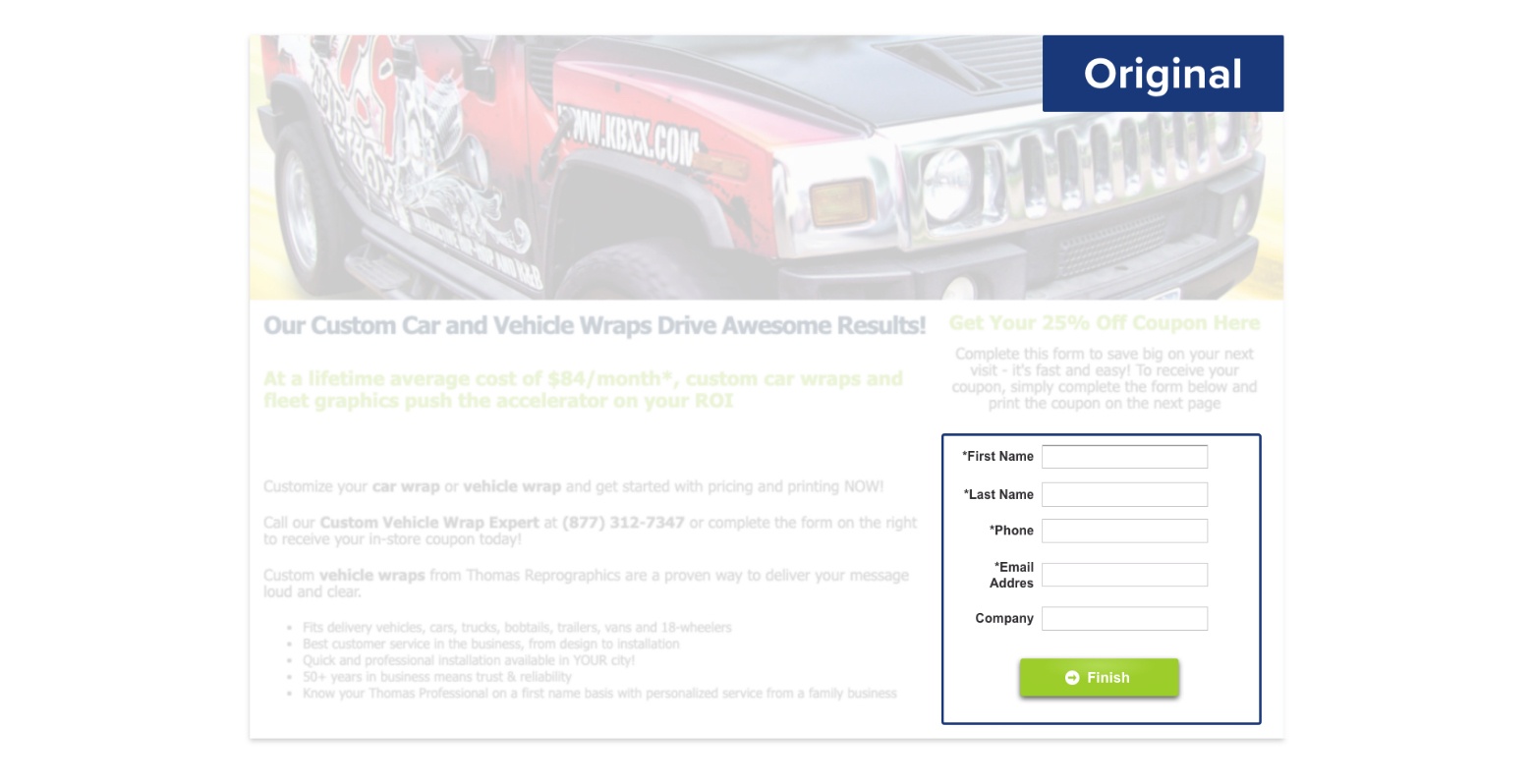

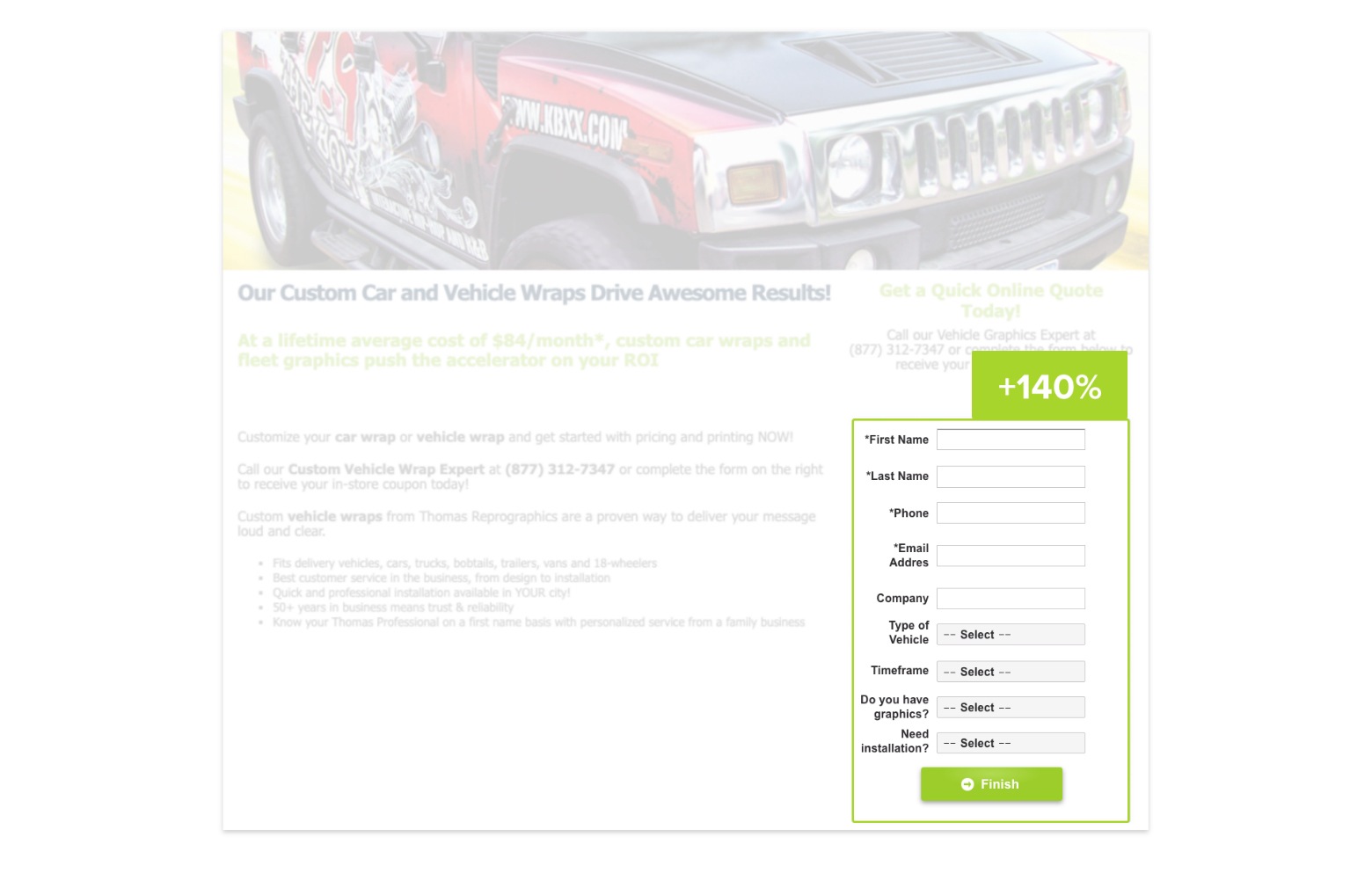

This A/B test is another example of how best-practice guides can be wrong. Optimisation specialists usually insist that shorter forms, with fewer fields, are likely to convert more effectively. In fact, this test from the car customisation company Thomas Printworks found that a longer page with more specific questions received far more completed forms.

The original page was completed by 2.2% of visitors, whilst the longer form converted 5.28% of the time. The increase represented a 140% uplift, at a Confidence Level of 90%.
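Uplift is a relative change, not the difference in percentage points, and the two are easy to confuse. The short sketch below reproduces the arithmetic for the figures above; the function name `relative_uplift` is just an illustrative choice.

```python
def relative_uplift(control_rate: float, treatment_rate: float) -> float:
    """Return the relative change in conversion rate between two versions."""
    return (treatment_rate - control_rate) / control_rate


control_rate = 0.022     # original form: 2.2% of visitors completed it
treatment_rate = 0.0528  # longer form: 5.28% of visitors completed it

uplift = relative_uplift(control_rate, treatment_rate)
points = (treatment_rate - control_rate) * 100

print(f"Relative uplift: {uplift:.0%}")
print(f"Absolute difference: {points:.2f} percentage points")
```

The difference between the two forms is 3.08 percentage points, but relative to the original 2.2% that is a 140% uplift.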

Why is A/B Testing So Important in 2025?

A/B testing has one major advantage over alternative ways of optimising a website: it is based on real users. Whilst UX design, best-practice guidelines and customer journey analysis can provide hints and suggestions, real-world testing offers certainty.

- E-commerce websites use it to strengthen their conversion funnel.

- SaaS websites use it to improve their home page and enhance their sign-up process.

- Lead generation websites use it to optimise their landing pages.

The same process is also used to help redesign websites. In 2017, for example, British Airways launched a new website. However, before releasing the new design, they trialled new versions of each webpage. By the time the finished website was published, each page had been tested over several months with thousands of visitors.

