How To Do A/B Testing: Quick Start Guide 2022

Knowing how to do A/B testing can save you a lot of time and money. A/B tests compare two versions of a single webpage: “A” and “B”, showing you which version gets the most clicks, sales and sign-ups. By following some simple rules, and avoiding the most common mistakes, you can optimise your site with far more confidence.

How To Do A/B Testing in 2022

A/B testing has become one of the most important competitive advantages in the world of digital marketing. The most successful brands, such as Amazon or Booking.com, run automated testing programmes that conduct hundreds of tests each week. For smaller businesses, though, it can be hard to know where to start.

[sta_anchor id=”where-do-you-start-with-a-b-testing”]Where Do You Start With A/B Testing?[/sta_anchor]

A/B testing is always based around a goal. For most people, the goal is to get more sales and increase their conversion rate. In order to test which version of your website works best, you need to decide on a measurable goal, create a suitable hypothesis and target a large enough sample. You also need a platform that lets you edit your test page and allocate traffic between the different versions.

- Traffic – You can’t test your website without a large enough test-sample

- User Research – To create a hypothesis, you need some evidence about how people interact with your content.

- Hypothesis – You need a strong hypothesis about what will improve your original page

- Testing Tool – An app or optimization software to manage your traffic and collect your results

Finding a hypothesis that will generate uplift is a specialist skill. Knowing where to start with A/B tests often requires a considerable amount of user research.

[sta_anchor id=”the-best-a-b-testing-tools-for-your-industry”]Choosing an A/B Testing Tool[/sta_anchor]

Four years ago, the market for A/B testing tools was divided between two options: Optimizely and VWO. Since then, the number of options has grown rapidly. AB Tasty arrived with a set of advanced analytics and, in 2017, Google launched its own solution: Google Optimize. In 2022 there are no fewer than 24 A/B testing tools competing for your attention.

To find the right tool for your website, you need to ask yourself some questions:

- What skills do you have? If your team doesn’t do web development, you need a tool with a webpage editor. If your team doesn’t have statistical expertise, you need built-in statistics.

- What kind of volume do you get? Does your traffic match your ambition? To get reliable results, you need between 10,000 and 100,000 visitors a month on each page you want to test. For multivariate testing, you need even more.

- Will you need other tools? To optimise your site effectively, you may need more than one tool. For example, you might think about using heat maps, scroll maps or mini surveys.

Choosing the best A/B testing tool for your business could save you a lot of money and time, allowing you to focus on developing stronger hypotheses.

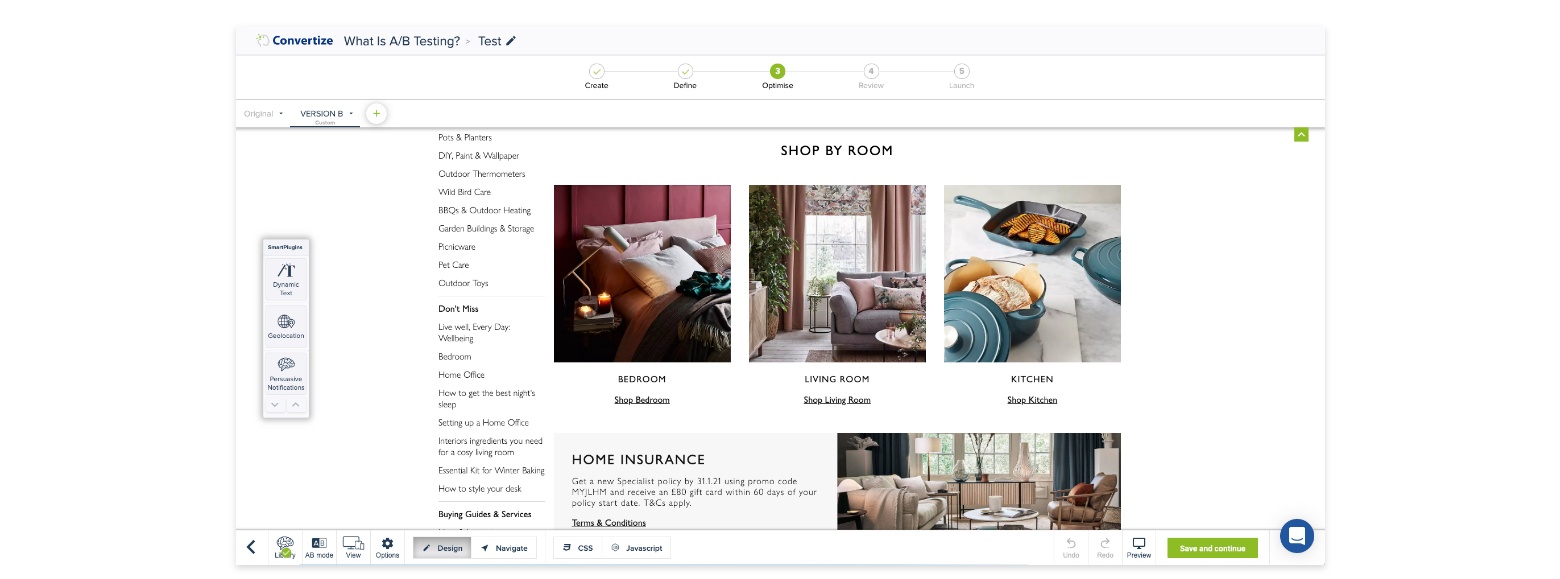

Best Value: Convertize

Convertize is a simple, user-friendly tool for business websites. Specialist features like the Autopilot and Hybrid Statistics engine allow anyone to test website content quickly and safely.

The platform provides drag-and-drop plugins that allow users to incorporate dynamic text, geolocation and persuasive notifications into their tests.

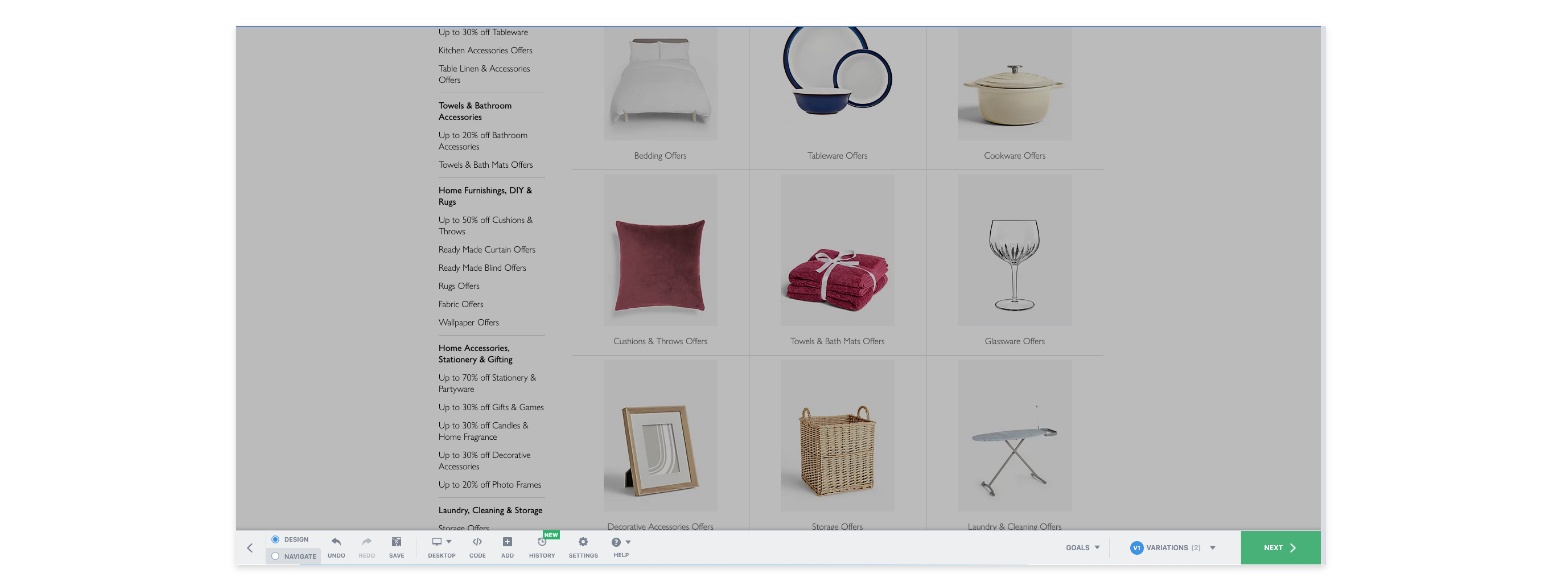

Best For Marketing Agencies: VWO

VWO combines testing features with heat maps and session recording, making it a useful all-purpose tool for CRO agencies. It also offers multivariate testing, which allows websites with 100,000+ monthly visitors to test a combination of different scenarios.

Whilst the market is full of testing platforms with drag-and-drop editors presenting themselves as VWO alternatives, the Visual Website Optimizer is still the most versatile tool for marketing agencies.

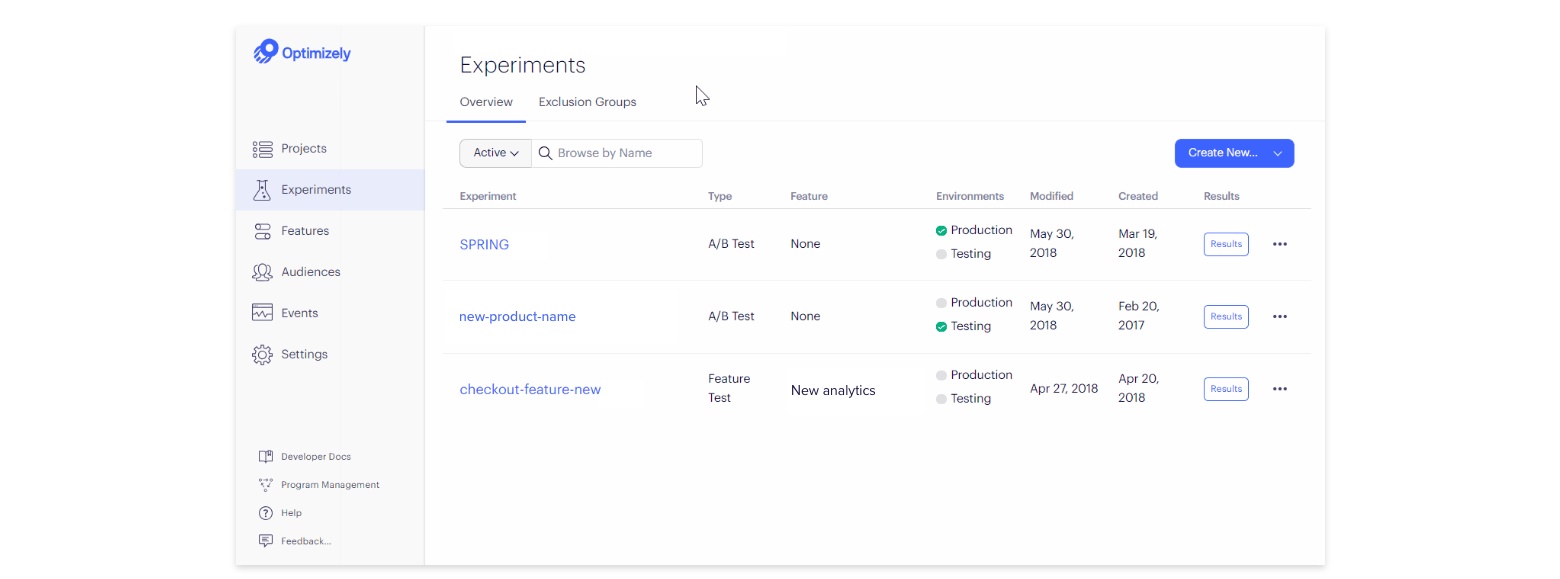

Best For App Developers: Optimizely

Optimizely was one of the first solutions to make A/B testing widely available. Alongside VWO, it was a pioneer of the drag-and-drop scenario editor. However, in recent years, the platform has turned into a more complex product designed with web development agencies and SaaS platforms in mind.

Optimizely was launched with a range of pricing options, which included a popular free plan. However, the move towards specialist users with advanced technical requirements has come with a more exclusive pricing strategy. Because of this, most of the platform’s original users have moved to one of the many Optimizely alternatives.

[sta_anchor id=”how-to-do-a-b-testing-in-5-steps”]How To Do A/B Testing in 5 Steps[/sta_anchor]

Most large websites and agencies follow a continuous, step-based approach to A/B testing. This iterative strategy allows you to build on each successful test, optimising your website systematically.

Step 1. Funnel Analysis

Before you’ve even begun to think about what to test, you need to find out where your website can be improved. Analytics tools like Google Analytics show you how visitors are moving through your website. By examining this data, and finding weaknesses in your “Conversion Funnel”, you can identify where the changes need to be made.
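As a rough illustration of funnel analysis, the sketch below computes the step-to-step conversion rate for a funnel and flags the transition that loses the largest share of visitors. The step names and visitor counts are hypothetical; in practice you would export them from your analytics tool. The lowest rate is only a starting point for investigation, not proof of a problem.

```python
# Hypothetical funnel counts; replace with figures from your analytics tool.
funnel = [
    ("Landing page", 10000),
    ("Product page", 4200),
    ("Basket", 900),
    ("Checkout", 450),
    ("Purchase", 300),
]


def step_conversion(steps):
    """Return (from_step, to_step, rate) for each consecutive pair of steps."""
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        rate = count_b / count_a if count_a else 0.0
        rates.append((name_a, name_b, rate))
    return rates


def weakest_step(steps):
    """Return the transition with the lowest step-to-step conversion rate."""
    return min(step_conversion(steps), key=lambda r: r[2])


for src, dst, rate in step_conversion(funnel):
    print(f"{src} -> {dst}: {rate:.1%}")

src, dst, rate = weakest_step(funnel)
print(f"Weakest step: {src} -> {dst}")
```

With these example numbers, the transition from the product page to the basket has the lowest rate, so that is where you might look for a hypothesis first.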

Step 2. Creating And Prioritising Your Hypotheses

There is a wide range of CRO tools that can help you form your hypotheses. These range from heat map tools, such as Hotjar (from $29/month) and CrazyEgg (from $24/month), to survey tools like Typeform (from $30/month). A good A/B testing hypothesis needs to be clearly defined and based on your data. It should also be closely related to your KPIs and have a good chance of producing results.

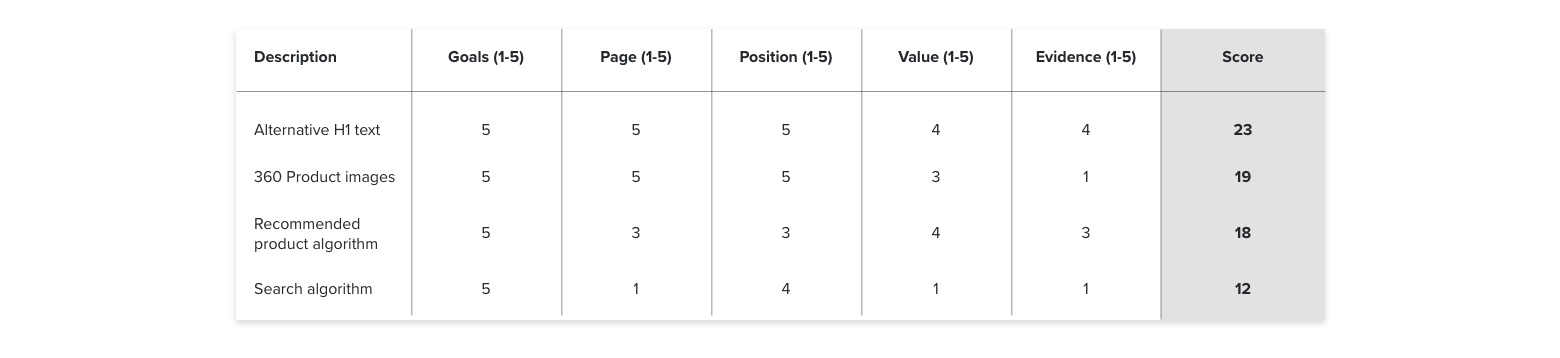

Scoring your hypotheses against a priority checklist will help you to decide which ideas to test first. Although the scores you give in each category are subjective, a checklist like this can help you to focus on goal-orientated factors rather than superficial concerns.

- Goals – How closely does your hypothesis relate to your KPIs?

- Page – Are the target pages a priority?

- Position – Will the change take place above or below the fold, and how visible is it?

- Value – Will the treatment have an impact on the value you offer a customer, or is it just cosmetic?

- Evidence – Are there previous examples of a treatment like this working?

A combined score of over 20 suggests that your hypothesis is very strong and has a good chance of working.
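The checklist can be kept in a spreadsheet, but a short script makes the ranking explicit. This is a minimal sketch that assumes each of the five categories is scored from 1 to 5 (the scale is an assumption, not something the checklist specifies); the threshold of 20 comes from the text above, and the example hypotheses are invented.

```python
CATEGORIES = ("goals", "page", "position", "value", "evidence")
STRONG_THRESHOLD = 20  # from the text: a combined score over 20 is very strong


def total_score(scores):
    """Sum the five category scores, checking the assumed 1-5 scale."""
    for category in CATEGORIES:
        if not 1 <= scores[category] <= 5:
            raise ValueError(f"{category} score must be between 1 and 5")
    return sum(scores[category] for category in CATEGORIES)


def rank_hypotheses(hypotheses):
    """Return (name, total, is_strong) tuples, highest total first."""
    ranked = [(name, total_score(scores)) for name, scores in hypotheses.items()]
    ranked.sort(key=lambda item: item[1], reverse=True)
    return [(name, total, total > STRONG_THRESHOLD) for name, total in ranked]


# Hypothetical hypotheses and scores, for illustration only.
hypotheses = {
    "New footer colour": {
        "goals": 1, "page": 2, "position": 1, "value": 1, "evidence": 2,
    },
    "Shorter checkout form": {
        "goals": 5, "page": 5, "position": 4, "value": 4, "evidence": 4,
    },
}

for name, total, strong in rank_hypotheses(hypotheses):
    print(f"{name}: {total}{' (strong)' if strong else ''}")
```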

Step 3. Designing Your Experiment

It is important to be precise about the settings for your experiment. Before launching a test, you need to decide on a goal, which pages to target and how you will divide your traffic. Some A/B testing tools use a “Multi-Armed Bandit” to divide visitors between your pages, rather than a fixed split.

What is an A/B testing Multi-Armed Bandit?

A Multi-Armed Bandit is an algorithm that decides how to allocate your traffic (between your test pages). Usually, it sends more of your traffic to the best-performing pages.
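To make the idea concrete, here is a small simulation of one common bandit strategy, Thompson sampling. It is a sketch under stated assumptions, not the algorithm used by any particular testing tool: the class name, the uniform Beta(1, 1) prior and the conversion rates in the simulation are all chosen for illustration.

```python
import random


class ThompsonBandit:
    """Allocates visitors by sampling each variant's plausible conversion rate."""

    def __init__(self, variants, seed=None):
        self.rng = random.Random(seed)
        self.stats = {v: {"visitors": 0, "conversions": 0} for v in variants}

    def choose(self):
        """Draw from each variant's Beta posterior and pick the highest draw."""
        def draw(variant):
            s = self.stats[variant]
            failures = s["visitors"] - s["conversions"]
            return self.rng.betavariate(1 + s["conversions"], 1 + failures)
        return max(self.stats, key=draw)

    def record(self, variant, converted):
        """Update the counts after a visitor has seen a variant."""
        self.stats[variant]["visitors"] += 1
        if converted:
            self.stats[variant]["conversions"] += 1


def simulate(true_rates, visitors, seed=0):
    """Run the bandit against made-up true rates; return visitors per variant."""
    bandit = ThompsonBandit(list(true_rates), seed=seed)
    world = random.Random(seed + 1)  # separate stream for visitor behaviour
    for _ in range(visitors):
        variant = bandit.choose()
        bandit.record(variant, world.random() < true_rates[variant])
    return {v: s["visitors"] for v, s in bandit.stats.items()}


traffic = simulate({"A": 0.05, "B": 0.15}, visitors=5000)
print(traffic)
```

In a run like this, the variant with the higher underlying rate ends up receiving most of the traffic, which is the behaviour described above.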

You also need to decide on the “Confidence Level” you expect to reach. A 95% Confidence Level is the standard for most agencies.

What is Confidence Level?

A Confidence Level is the threshold (given as a percentage) that your results must reach before you treat them as a real effect rather than random variation. Setting a Confidence Level of 95% means that, if there were no real difference between your pages, a result this large would appear by chance only 5% of the time (1 in 20).

Step 4. Running Your Experiment

During your experiment, it is important that you avoid biasing your results by interfering with the traffic that reaches your test page. Using paid advertising to increase your visitors will change the quality of your leads, giving a false impression about which page version is best. Similarly, it’s best not to edit your scenarios or settings whilst the test is running.

One of the most common problems that first-timers encounter is not knowing when to end a test. Statistical significance may not be enough to guarantee that the results are trustworthy, and not every test has to run until it reaches 95% Confidence Level.

Step 5. Interpreting Your Results

Even if you achieve an impressive and statistically significant uplift, it is still a good idea to change things gradually. This is because website changes often have unexpected effects. For example, version B might lead visitors to make a purchase more frequently – but it might also reduce the average amount people spend.
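One way to catch this kind of side effect is to look at revenue per visitor alongside conversion rate. The sketch below uses invented figures in which version B converts better but earns less per visitor; the function name and the numbers are illustrative only.

```python
def summarise(visitors, orders, revenue):
    """Return conversion rate, average order value and revenue per visitor."""
    return {
        "conversion_rate": orders / visitors,
        "average_order_value": revenue / orders if orders else 0.0,
        "revenue_per_visitor": revenue / visitors,
    }


# Hypothetical results for the two versions.
version_a = summarise(visitors=10000, orders=300, revenue=18000.0)
version_b = summarise(visitors=10000, orders=360, revenue=17280.0)

for name, result in (("A", version_a), ("B", version_b)):
    print(
        f"{name}: conversion {result['conversion_rate']:.1%}, "
        f"average order {result['average_order_value']:.2f}, "
        f"revenue per visitor {result['revenue_per_visitor']:.3f}"
    )
```

Here B lifts the conversion rate from 3.0% to 3.6%, but the average order falls from 60 to 48, so revenue per visitor drops from 1.80 to 1.728.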

[sta_anchor id=”understanding-a-b-testing-statistics”]Understanding A/B Testing Statistics[/sta_anchor]

Most modern A/B testing tools do the calculations for you, so there’s no need to dig through your statistics. However, it can be useful to understand the principles involved in A/B testing statistics, since it can help you decide what kind of test to use, and when to double-check your platform.

The most important concept for interpreting your results is “statistical significance”: how unlikely it is that the difference between the conversion rates recorded for version “A” and version “B” arose from random chance alone. A test is considered “significant” when it reaches a pre-defined “confidence level” (95% confidence is the standard setting for an A/B test). This means that, assuming no real effect has taken place, you could expect to see results of a similar magnitude 5% of the time (1 in 20).

An A/B testing tool will tell you when your tests have reached statistical significance at the confidence level you have set. It will also tell you the probability of your results representing a false positive. To be even more sure, you can check your A/B test’s significance by entering the results into an A/B testing calculator.
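If you want to see what such a calculator does, the sketch below runs a two-proportion z-test, one standard way of comparing two conversion rates. It is an assumption that your tool uses this particular test; many use other frequentist or Bayesian methods. The visitor and conversion counts are made up.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        return 0.0, 1.0  # no conversions (or all conversions) in both arms
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 200 of 10,000 convert on A, 260 of 10,000 on B.
z, p_value = two_proportion_z_test(200, 10000, 260, 10000)
confidence_level = 0.95
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant" if p_value < 1 - confidence_level else "Not significant")
```

A p-value below 0.05 corresponds to reaching significance at the 95% confidence level.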

How Much Traffic Do You Need To Do A/B Testing?

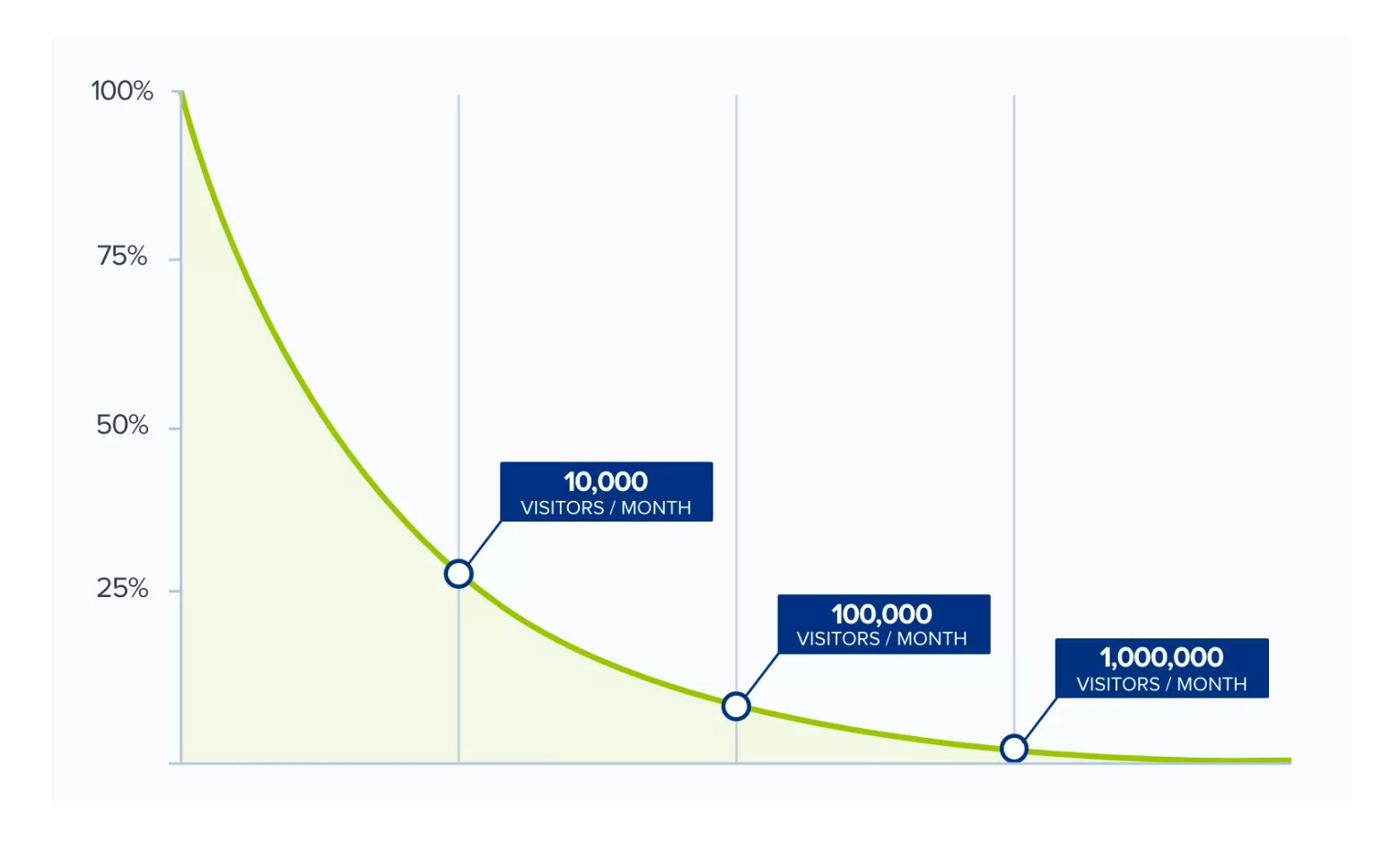

Finding a way to get Uplift is not easy, and achieving statistical significance is downright difficult. That’s why you need to think about your sample size before you launch an experiment. This simple A/B testing sample size chart can help give you a sense of how many visitors you will need to produce significant results within a 30-day period.

Most first-time testers are surprised at how large their sample needs to be to produce reliable results. As the chart shows, a larger uplift (over 10%, for example) reduces the sample size required to reach statistical significance.

1. Fear Factor zone

With fewer than 10,000 visitors a month, A/B testing will be very unreliable because it’s necessary to improve the conversion rate by more than 30% in order to have a “winning” variation within 30 days.

2. Thrilling zone

With between 10,000 and 100,000 visitors a month, A/B testing can be a real challenge, as you need to improve the conversion rate by at least 9% for the results to be reliable.

3. Exciting zone

With between 100,000 and 1,000,000 visitors a month, we’re entering the “Exciting” zone: it’s necessary to improve the conversion rate by between 2% and 9%, depending on the number of visitors.

4. Safe zone

Beyond one million visitors a month, we’re in the “Safe” zone, which allows us to carry out a number of iterative tests.
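The relationship between uplift and sample size can be estimated with the standard formula for comparing two proportions. The sketch below assumes a two-sided test at 95% confidence with 80% statistical power; those settings and the example baseline rate are assumptions, so the figures will not necessarily match the chart above.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_uplift,
                            confidence=0.95, power=0.80):
    """Estimate the visitors needed in EACH variant to detect the uplift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical page converting at 2%, for a range of expected uplifts.
for uplift in (0.05, 0.10, 0.30):
    needed = sample_size_per_variant(0.02, uplift)
    print(f"{uplift:.0%} uplift: about {needed:,} visitors per variant")
```

The required sample shrinks quickly as the expected uplift grows, which is why low-traffic sites can only detect large improvements.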

[sta_anchor id=”common-a-b-testing-mistakes”]The 3 Most Common Mistakes[/sta_anchor]

A/B testing can be tricky, and it’s all too easy to invest time and money without producing any results. These are some of the most common A/B testing mistakes.

1. Prioritising the wrong things

You can’t test everything, so you should prioritise your tests. The best way to prioritise your tests is to make a priority table like the one described in “How To Do A/B Testing” Step 2. You should also bear in mind the following things:

- Potential: the potential improvement from a successful test

- Importance: the volume of traffic on the tested page and the proportion of your conversions in which it is involved

- Resources: What is needed to run the test and apply the results

- Certainty: The strength of the evidence that your test will produce a positive result

2. Testing Too Many Things At Once

An A/B test involves comparing A with B. In other words, testing one thing at a time. A common mistake is to create too many scenarios within the same test. Running too many alternative versions will extend the time each test takes to produce reliable results. Again, this comes down to the question of sample size.

If you are running a normal A/B test, you should test one element at a time and create a maximum of 3 variations. Each additional scenario increases the sample size required to reach significance, without really improving the insight you produce.
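One reason extra variations are costly is that every additional comparison against the original is another chance of a false positive. The sketch below shows the combined false-positive rate and the Bonferroni correction, a common (and conservative) remedy. Treating the comparisons as independent is a simplifying assumption, since they share the same control page.

```python
def family_wise_error_rate(alpha, comparisons):
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha, comparisons):
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / comparisons


alpha = 0.05  # corresponds to a 95% confidence level
for variations in (1, 2, 3):
    overall = family_wise_error_rate(alpha, variations)
    corrected = bonferroni_alpha(alpha, variations)
    print(
        f"{variations} variation(s): overall false-positive risk {overall:.1%}, "
        f"corrected threshold {corrected:.4f}"
    )
```

With three variations the uncorrected risk rises from 5% to about 14%. Holding it down means using a stricter threshold for each comparison, which in turn requires more visitors per variation.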

3. Stopping The Test Too Early

“Too early” simply means that you stop your test before the results are reliable. This is one of the most familiar A/B testing mistakes, and possibly the most important to avoid. It is easy to commit a Type I or Type II statistical error simply by responding to your results prematurely.

Most CRO agencies have a strict “No-Peeking” rule when running a test. That way, nobody is tempted to end a test before Statistical Significance is reached.
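The effect of peeking can be demonstrated with a simulated A/A test, where both versions are identical and any “winner” is a false positive. This sketch compares stopping at the first significant look with waiting for the planned end of the test. The number of looks, the traffic per look and the conversion rate are arbitrary assumptions.

```python
import math
import random


def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test p-value (normal approximation)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / standard_error
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(simulations=400, looks=10, visitors_per_look=200,
                      rate=0.10, alpha=0.05, seed=1):
    """Return (false-positive rate with peeking, rate at the planned end)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _sim in range(simulations):
        conv_a = conv_b = visitors = 0
        significant_at_any_look = False
        p_value = 1.0
        for _look in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            visitors += visitors_per_look
            p_value = two_sided_p_value(conv_a, visitors, conv_b, visitors)
            if p_value < alpha:
                significant_at_any_look = True
        peeking_hits += significant_at_any_look
        final_hits += p_value < alpha
    return peeking_hits / simulations, final_hits / simulations


peeking_rate, final_rate = simulate_aa_tests()
print(f"False positives when stopping at any significant look: {peeking_rate:.1%}")
print(f"False positives when waiting for the planned end: {final_rate:.1%}")
```

Because the final look is one of the looks, the peeking rate can never be lower than the end-of-test rate, and in practice it is usually several times higher.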

[sta_anchor id=”a-b-testing-jargon-buster”]Frequently Asked Questions[/sta_anchor]

Does A/B Testing Affect SEO?

Google clarified its position regarding A/B Testing in an article published on its blog. The important points to remember are:

- Use “Canonical Content” Tags. Search engines find it difficult to rank content when it appears in two places (“duplicate content”). As a result, duplicate content can end up with a lower SERP ranking. When two URLs displaying alternate versions of a page are live (during A/B tests, for example) it is important to specify which of them should be ranked. This is done by attaching a rel=canonical tag to the alternative (“B”) version of your page, directing web crawlers to your preferred version.

- Do Not Use Cloaking. In order to avoid penalties for duplicate content, some early A/B testers resorted to blocking Google’s site crawlers on one version of a page. However, this technique can lead to SEO penalties. Showing one version of content to humans and another to Google’s site indexers is against Google’s rules. It is important not to exclude Googlebot (for example, through your site’s robots.txt file) whilst conducting A/B tests.

- Use 302 redirects. Redirecting traffic is central to A/B testing. However, a 301 redirect can trick Google into thinking that an “A” page is old content. In order to avoid this, traffic should be redirected using a 302 redirect (which indicates a temporary redirect).
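For readers who manage their own redirects, the two technical points above can be sketched in a few lines. The helper names and the example.com URLs are hypothetical, and the functions only build the values a web framework would send; they are not tied to any specific server or testing tool.

```python
from html import escape
from http import HTTPStatus


def canonical_link_tag(original_url):
    """Build the tag to place in the <head> of the alternative ("B") page."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


def temporary_redirect(variant_url):
    """Return the status code and headers for a temporary (302) redirect."""
    return HTTPStatus.FOUND.value, {"Location": variant_url}


# Hypothetical URLs, for illustration only.
print(canonical_link_tag("https://example.com/pricing"))
status, headers = temporary_redirect("https://example.com/pricing-b")
print(status, headers)
```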

The good news is that, by following these guidelines, you can make sure your tests have no negative SEO impact on your site. The better news is that, if you are using A/B testing software, these steps are usually handled for you.

How Can I Do A/B Testing Without Slowing Down My Page?

A/B testing software can reduce loading speed due to the way in which it hosts competing versions of a page. A testing tool can create scenarios in two ways:

- Server-side – This form of A/B testing is faster and more secure. However, it is also expensive and more complicated to implement.

- Client-side – This approach uses JavaScript code that makes the changes directly in the visitor’s browser. Client-side testing can create a loading delay of a fraction of a second. The loading delay traditionally associated with client-side software is known as the “flickering effect”.
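To illustrate the server-side approach, the sketch below assigns each visitor to a version before the page is rendered, so the browser never has to swap content after loading. Hashing a visitor ID is one common way to do this; the function name, the ID format and the experiment name are assumptions made for the example.

```python
import hashlib


def assign_variant(visitor_id, experiment, variants=("A", "B")):
    """Deterministically map a visitor to a variant for a given experiment."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# The same visitor always gets the same version of the page.
print(assign_variant("visitor-123", "homepage-headline"))
print(assign_variant("visitor-123", "homepage-headline"))

# Across many hypothetical visitors, the split is close to even.
counts = {"A": 0, "B": 0}
for i in range(10000):
    counts[assign_variant(f"visitor-{i}", "homepage-headline")] += 1
print(counts)
```

Because the assignment depends only on the visitor ID and the experiment name, no extra storage is needed to keep a returning visitor on the same version.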

Most A/B testing software operates on a client-side basis. This is to make editing a site as easy as possible. In order to reduce the impact of testing on a page, the best A/B testing solutions have found ways to speed up page loading.

How Do You Choose What To A/B Test and When?

You can calculate the number of experiments you can run in a single year by entering the following details into a sample size calculator and finding out the number of days required for each test:

- Your page’s weekly traffic

- Your page’s current conversion rate

- The uplift you can reasonably expect to achieve

Once you know how many tests you can run on which pages, you can put together a full testing strategy for your website. You should start by focusing on the pages that will take the shortest amount of time to produce significant results, as these will tend to be the ones that provide you with the most “low hanging fruit”.

Low-Hanging Fruit vs Optimization

There are two kinds of A/B testing strategy, and the one you choose should depend on where you are in the optimisation process. Optimising a website that has a very low conversion rate allows you to take more risks with your edits. You may even decide to try different kinds of offer or value propositions.

For later-stage tests, when you are optimising for more marginal gains, each test should focus on a more discrete variation. These are the kind of experiments favoured by large companies like Amazon and Google.
